On August 8th, the 2025 World Robot Conference (WRC) officially kicked off at the Beijing Etrong International Exhibition & Convention Center.

This year’s conference, themed ‘Making Robots Smarter, Making Embodied Entities More Intelligent’, features three major pavilions: the Innovation Pavilion, the Application Pavilion, and the Technology Pavilion. Over 200 domestic and foreign robotics enterprises are participating, including more than 50 makers of complete humanoid robots. They are showcasing over 1,500 exhibits and more than 100 newly launched products such as robots, sensors, dexterous hands, and miniature planetary roller screws.

As a participating media outlet, Edward@apfiti.com conducted an on-site exploration of the conference on the opening day of WRC.

The pavilions were packed. From core components to complete machines, and even embodied large models, everything was on display. Performances and lively chatter filled the air. ‘What kind of work can it do?’, ‘When can it be put into use?’ and ‘What’s the price?’ were the questions we heard most often from the audience.

After a long day of pushing through the crowds, our strongest impression is that this is not just a technology feast, but also a collective calibration of industrial trends.

From LiDAR manufacturers seeking new growth curves, to humanoid robots moving from show stages to factories, and to the deep integration of bionic technology and embodied intelligent models, the robotics industry is collectively bidding farewell to the ‘tech showoff’ era and sprinting towards the ‘actual combat’ stage.

After a day of exploring the exhibition, we have summarized four major trends in the robotics industry. These trends not only show us the dawn before the commercialization of robots, but also herald the arrival of an era in which robots truly take part in production and daily life.

Trend 1: LiDAR Manufacturers Attempting to Achieve New Business Growth in the Robotics Industry

At the 2025 World Robot Conference, LiDAR manufacturers such as Wuhan Hesai, Hesai Technology, and RoboSense all made appearances and showcased their flagship products. The participation of leading industry players like Hesai Technology and RoboSense also means that LiDAR is undergoing an industrial shift from being a ‘standard configuration for new energy vehicles’ to a ‘standard configuration for robots’.

Behind this move is the collective bet of LiDAR manufacturers on a ‘second growth curve’.

The robotics industry has developed rapidly in recent years and is on the eve of large-scale industrialization. The Ministry of Industry and Information Technology’s ‘Guiding Opinions on the Innovative Development of Humanoid Robots’ clearly states that the annual output of domestic complete machines should reach 1 million units by 2027, and a complete industrial chain should be formed by 2030. According to market development logic, the next 5 to 10 years will be a window period for the explosion of the robotics industry.

As a high-precision sensor, LiDAR has already been verified in terms of product performance and industrial applications in the market. Naturally, LiDAR manufacturers will not give up this emerging market and hope to ride the wave of the industry to achieve a new round of development.

Qiu Chunchao, CEO of RoboSense, once publicly stated: ‘The robot is a very large application scenario, 10 times that of the automotive market.’

On the other hand, as LiDAR technology has matured and costs have fallen, competition in the new energy vehicle market has become fierce. So far, the large-scale adoption of LiDAR in new energy vehicles has not brought substantial profits to LiDAR manufacturers.

For example, Hesai Technology’s gross profit margin dropped from 70.3% to 35.2% from 2019 to 2023, and rebounded to 42.6% in 2024, but its profitability is still challenged. At the same time, the involution in the automotive industry has made the cost and price of LiDAR almost transparent targets, which has exacerbated the profit shrinkage of suppliers.

However, the robotics industry is still in the early stage of development, with an immature supply chain, and competition over component performance and price has not yet become white-hot. This early-stage market makes it easier for LiDAR manufacturers to quickly grow their market share and expand profits through their products.

GAC Group Enters the Robotics Industry with Intelligent Wheelchairs

At the 2025 World Robot Conference, GAC Group was the only automaker participating. Unlike other automakers or component suppliers that build robot businesses through investment or cooperation, GAC Group’s approach is direct: it manufactures robots itself.

GAC Group showcased a variety of robot products, including the third-generation embodied intelligent humanoid robot GoMate. It is a full-size humanoid robot with a two-wheel standing height of up to 1.75 meters and 38 degrees of freedom in the whole body. It adopts a variable wheel-leg structure, with a maximum moving speed of 15 km/h, and can switch between two-wheel-leg and four-wheel-leg modes, with all-terrain mobility. A GAC Group staff member explained to Edward@apfiti.com that this model of robot will be mainly used in the security field.

But the most eye-catching product is an intelligent electric wheelchair launched by GAC Group, which the company officially calls the embodied intelligent manned wheel-leg robot GoMove. It is understood that this robot can carry people up and down stairs, with a maximum load of 90 kilograms.

This is not GAC Group’s first attempt at electric wheelchair products. In March 2024, the GAC Trumpchi E9 electric welfare version was launched, equipped with a ‘welfare seat’. It is installed on the right side of the middle row of the Trumpchi E9, can rotate out of the vehicle and lower the height, facilitating people with mobility difficulties to get on and off the car. At the same time, the seat is equipped with a battery, and after landing, it can be separated from the mechanism to become an electric wheelchair for use.

The difference is that this wheel-leg robot can be given a destination and drive there automatically, making it more like an autonomous driving robot for special scenarios.

Currently, the above-mentioned products have not been officially launched, but their research and development as well as production are all completed by GAC Group. Although there is a large difference from the automotive business, GAC Group has not split off the robot business. Edward@apfiti.com learned from on-site staff that there is a possibility of splitting into an independent entity in the future.

When Edward@apfiti.com asked why GAC Group directly engaged in R&D of products in the robot field, the GAC staff replied directly: ‘Because the robotics industry is a hot trend.’

Hesai Technology: Miniature LiDAR ‘Mounted on Dogs’

As the world’s first and so far the only listed LiDAR company to achieve annual profitability, Hesai Technology is striving to expand its business boundaries. Now that new energy vehicles have begun to use LiDAR as a standard configuration, and the price range of equipped models keeps falling, the robotics industry is the next market that Hesai Technology is focusing on.

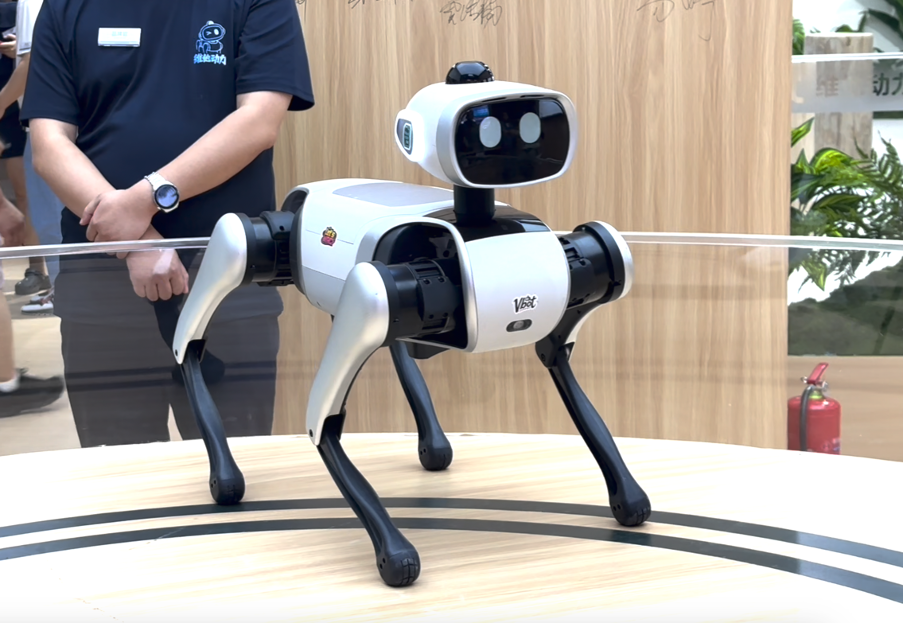

At this World Robot Conference, Hesai Technology exhibited the JT128 miniature ultra-hemispherical 3D LiDAR, which was mounted on the companion robot dog developed by Vita Dynamics.

The JT series LiDAR first appeared at the end of 2024 and was displayed at CES 2025. The sensors in this series are very small, with an exposed window height of only 30 mm after embedded installation, yet they offer a 360°x187° field of view and support up to 128 channels. At the conference site, when the robot dog looked up, the LiDAR on its head was almost invisible from a low viewing angle, but the surrounding LiDAR point cloud was still very clear.

Edward@apfiti.com learned from Hesai Technology staff that the JT LiDAR currently mounted on Vita Dynamics’ companion robot dog is a 16-channel LiDAR with a mechanical rotation structure.

This seemingly ‘old-fashioned’ configuration actually has multiple considerations. On one hand, the main movement needs of companion robot dogs are low-intensity movements such as following and obstacle avoidance, and low-channel configuration can well save costs. Hesai Technology told Edward@apfiti.com: ‘If we equip this robot dog with a radar with more than 100 channels, it will have to be sold for tens of thousands of RMB, which is not conducive to market promotion and unnecessary.’

On the other hand, the mechanical rotation structure also has strong stability. When the robotics industry does not have a perfect industrial chain and industry standards, product stability will help suppliers reach long-term cooperation with enterprises and further seize the market.

Of course, the JT series LiDAR also has high-configuration products, which will be applied to robots in scenarios such as mobility, delivery, cleaning, and industrial production.

Trend 2: Humanoid Robots Shift from Showoff to ‘Really Getting Work Done’

At WRC 2025, Edward@apfiti.com observed that robots no longer just display complex movements or forms, but emphasize their ability to ‘really get work done’ in specific scenarios. For example, Magic Atom’s ‘Xiaomai’, Kepler K2’s logistics sorting, LEJU’s ‘KUAVO’ industrial handling, and Keenon’s ‘position-oriented’ service robots all take actual work efficiency and stability as their main selling points.

Commercialization in Industrial Scenarios Begins to Accelerate

LEJU Robotics has deep roots in industrial scenarios, and its ‘KUAVO’ robot demonstrated a series of complex industrial operations on site, including industrial bin handling, precision material sorting, SMT tray outbound, etc.

Although industrial automation is the general trend, how robots can truly understand and efficiently perform complex industrial tasks has always been a core problem in the industry. In the traditional mode, robots start to ‘explore’ and learn after being deployed in factories, which is not only inefficient but also has high trial-and-error costs.

In response to this, LEJU Robotics provides a breakthrough solution: establishing a ‘robot training ground’.

In the highly simulated environment of the training ground, robots can learn and internalize every step of tasks such as material sorting, explanation and demonstration, and logistics distribution through extensive hands-on training. This systematic ‘further study’ ensures that when they enter an actual factory production line, they can complete work with higher efficiency and accuracy.

According to on-site staff, as of the first half of this year, the total shipment volume of LEJU Robotics has reached 600-700 units.

Magic Atom brought their robot family to this WRC with great sincerity, including the full-size general-purpose biped humanoid robot ‘Xiaomai’, the high-dynamic biped humanoid robot MagicBot Z1, the consumer-grade quadruped robot MagicDog, the wheeled quadruped robot MagicDog-W, and the industrial quadruped robot MagicDog Y1 that made its debut.

Similarly, each product line is pursuing practicality.

‘Xiaomai’ MagicBot Gen1 is 1.74 meters tall and realistically reproduces the ‘glue dispensing scenario’ of an industrial assembly line. It can complete glue dispensing operations accurately and quickly, and can even ‘work with both hands’ at the same time. One set of operations takes only 20 seconds, and it can work continuously for more than 4 hours, demonstrating the potential for robots to enter industrial scenarios at scale.

In addition to humanoid robots, Magic Atom also launched the quadruped robot MagicDog Y1 specially built for harsh industrial environments. This industrial-grade ‘big dog’ did not appear out of thin air, but is the result of Magic Atom’s in-depth insight into market demand.

It is reported that it was the strong demand from customers for quadruped robots with larger load capacity and more functionality that contributed to the development and launch of MagicDog Y1.

It is equipped with an aviation-grade sealed body to ensure stable and reliable operation even under extreme conditions, whether it is the severe cold of minus 20 degrees Celsius or the extreme heat of up to 55 degrees Celsius. Magic Atom plans to apply MagicDog Y1 to key fields such as industrial inspection and emergency rescue.

Kepler’s humanoid robot K2 Hornet put forward the slogan ‘1 hour of charging, 8 hours of continuous work’ and demonstrated its application scenarios in logistics sorting and handling on site, emphasizing the robot’s battery life and work efficiency.

Commercial and Consumer Scenarios Attach More Importance to Functionality

As a small giant enterprise with more than ten years of experience, Keenon Robotics emphasized at the exhibition that its products ‘can work and know how to work’, with the core being to achieve commercial implementation.

In the view of Li Tong, CEO of Keenon, technology must serve the market. He predicts that biped humanoid robots are expected to take the lead in commercialization in vertical scenarios such as restaurant bartenders and McDonald’s hamburger making, but it will still take more than five years to fully enter family scenarios.

Because of this, Keenon proposed the concept of ‘robot positionization’, that is, letting robots focus on specific tasks instead of pursuing ‘versatility’, so as to achieve more practical implementation and value creation.

Keenon Robotics’ focus on ‘position-oriented’ robots in the commercial field is well grounded: the nearly 100,000 Keenon service robots deployed globally have accumulated massive amounts of real-environment data for the company.

A deeper advantage lies in its years of independent technology research and development and supply chain integration capabilities.

Keenon has realized independent research and development and production in the electromechanical system (including core components such as motors, reducers, servos, and drives), and has three self-built factories. This not only ensures the performance advantages of core components, but also guarantees the stability and reliability of the supply chain, laying the foundation for the commercialization of its subsequent humanoid robot products.

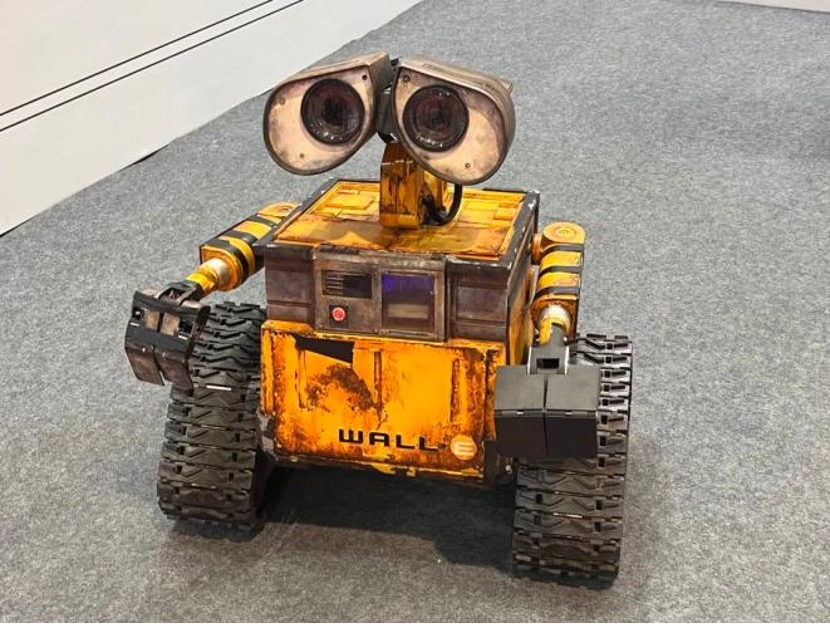

On site, Lexiang Technology’s W-bot (WAWA) almost became an ‘Internet celebrity check-in spot’ with its unique and friendly non-humanoid appearance. It came across as especially cute and approachable to children.

This robot was displayed at the exhibition as a hand-built concept prototype, and its pre-sale price was announced: 35,999 RMB. It is not just a cute companion, but also integrates a series of functions.

For example, WAWA has a load capacity of 20-30 kg and can be hitched to a camping cart to solve the trouble of outdoor transportation; it has a built-in high-quality audio system that supports listening to songs, singing, and karaoke to add fun to outdoor activities; its large battery capacity makes it a mobile power source that can supply low-power electrical appliances (such as small stoves, mobile phones, and speakers) to solve the problem of outdoor electricity use; in addition, it is equipped with a camera that can automatically follow children or pets to film them, record precious moments, and perform area security monitoring for outdoor safety.

This consumer-grade tracked robot WAWA developed for outdoor family scenarios allows us to see new trends in home entertainment and outdoor activities.

Embodied Intelligent Robots Also Start to Talk About Cost Performance

Beyond emphasizing functionality, robot enterprises at this WRC have launched mass-produced humanoid robots and industrial quadruped robots, and are accelerating commercial implementation with lower prices (such as Unitree R1 starting at 39,900 RMB and Youliqi starting at 88,000 RMB) and clearer application scenarios (industrial assembly lines, logistics sorting, outdoor handling, etc.).

Take Unitree as an example, from the Spring Festival Gala’s yangko dance to the robot boxing match in May, and then to this WRC, the popularity of Unitree Technology’s robots continues to rise, and the booth is again ‘surrounded by layers of people’.

However, behind this upsurge, Edward@apfiti.com also noticed that Unitree is accelerating the commercialization of robots.

In July this year, Unitree released the R1 humanoid robot. This lightweight product, weighing about 25 kilograms, entered the market with a disruptive price starting at 39,900 RMB, enhancing the market competitiveness of humanoid robots. R1 can not only smoothly complete complex actions such as boxing and running, but its EDU version also provides open software and hardware interfaces, giving developers and scientific research institutions room for secondary development.

Wang Xingxing, founder and CEO of Unitree Technology, told media including Edward@apfiti.com during the conference that Unitree hopes to allow more people to use robots at a better price. ‘From last year to this year, customers have purchased a lot of our humanoid robots, which has also established a new ecosystem, but the premise for this is sufficient shipment volume.’

At the same time, Unitree’s ‘veteran’ – G1 fighting humanoid robot has also attracted much attention.

This robot, known for its durability, fall resistance, and strong self-recovery ability, appeared in a unique Peking Opera performance at the WRC exhibition area, interacted warmly with the audience, and attracted a lot of attention. At this WRC, the newly upgraded G1 competed with the new R1 on the same stage.

Youliqi’s full-size humanoid robot, priced in the tens of thousands of RMB, has also started mass delivery, entering the market with a price starting at 88,000 RMB.

Trend 3: From ‘Brain in a Vat’ to the Physical World, VLA Models Bridge the Last Mile of Intelligence

Since the idea emerged in 2021-2022 and the term was first introduced with Google DeepMind’s RT-2, the VLA (Vision-Language-Action) model has become a hot topic in the field of embodied intelligence. From industry to academia, major global technology companies and research institutions are accelerating their efforts in this direction. Industry leaders such as Google DeepMind, Figure AI, Skild AI, and Physical Intelligence have long been investing in VLA models. Chinese companies such as GALBOT, AgiBot, Robot Era, and Proto-Sentient Intelligence are also continuing to invest deeply in VLA models.

The popularity of VLA models today is reasonable. From the perspective of large models, after ChatGPT, technology has gradually expanded from the language modality to the visual and action space modalities, promoting large models to transform from ‘brain in a vat’ to embodied intelligent entities that can interact with the physical world; from the perspective of action decision control, traditional control also needs to expand from simple MPC (Model Predictive Control) and closed-loop control to general control with common sense reasoning and physics grounding capabilities.

At this WRC, we also saw various VLA models shine.

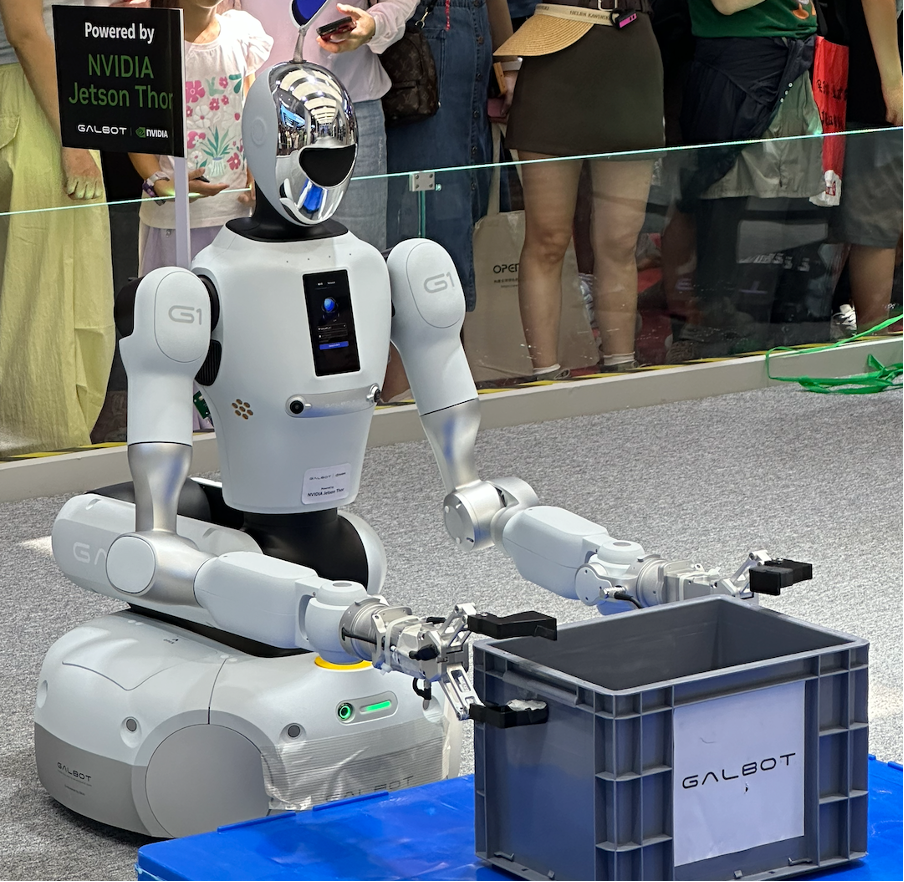

GALBOT General focused on showcasing GroceryVLA, an end-to-end embodied large model for the retail industry. Facing the supermarket environment with dense displays, diverse SKUs, and variable packaging forms, GALBOT demonstrated excellent recognition and grasping capabilities. The robot can quickly and accurately locate target commodities and complete stable and efficient picking and delivery operations, without remote control or pre-collection of scene data throughout the process.

GroceryVLA has broken through the technical bottleneck of the traditional separated design of ‘visual perception + trajectory planning’ and realized true end-to-end closed-loop control. Whether it is soft bagged snacks, hard boxed drinks, smooth plastic bottles or bagged jelly, or even commodities hanging on hooks, GALBOT can complete cross-category unified grasping. Even under challenging conditions such as lighting changes and rearranged product positions, the model remains stable and reliable.

AI2Robotics brought the global full-body embodied large model GOVLA, which has achieved capability leaps in three dimensions: spatial expansion from fixed scenarios to open environments, action upgrade from single-arm operation to full-body collaboration, and intelligent transition from basic instruction execution to complex long-horizon reasoning.

The GOVLA large model consists of three parts: the spatial interaction basic model, the slow system, and the fast system. By parsing user instructions (such as voice commands), real-time environmental information, and robot status, the dual systems work together: the slow system System2 is responsible for complex logical reasoning, task decomposition, and outputting language interaction content; the fast system System1 outputs the robot’s full-body control actions and movement trajectories, taking into account real-time response and complex decision-making capabilities. It is worth mentioning that conventional VLA large models only output robotic arm actions, while the GOVLA large model is the first to propose outputting full-body control and movement trajectories.

Therefore, GOVLA, which focuses on full-body collaborative control, full-scene business coverage, and can interact efficiently and execute autonomously, can be said to be a comprehensive upgrade of VLA technology.
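The division of labor between the slow and fast systems described above can be illustrated with a toy sketch. Everything in it is hypothetical: the names `SlowSystem`, `FastSystem`, and `run_dual_system`, the naive splitting of an instruction on " and ", and the action strings are illustrative assumptions, not AI2Robotics’ implementation or any published GOVLA interface.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Observation:
    """The three inputs named in the article: instruction, environment, robot status."""
    instruction: str
    environment: Dict
    robot_status: Dict


class SlowSystem:
    """Stand-in for 'System2': reasoning, task decomposition, language output."""

    def plan(self, obs: Observation) -> Tuple[List[str], str]:
        # Hypothetical decomposition: split the instruction on " and ".
        subtasks = [part.strip() for part in obs.instruction.split(" and ")]
        reply = f"Okay, I will do {len(subtasks)} step(s)."
        return subtasks, reply


class FastSystem:
    """Stand-in for 'System1': a full-body action plus a movement trajectory."""

    def act(self, subtask: str, obs: Observation) -> Dict:
        start = obs.robot_status.get("position", (0.0, 0.0))
        # If no target is known for this subtask, the robot stays where it is.
        goal = obs.environment.get("targets", {}).get(subtask, start)
        return {
            "subtask": subtask,
            "whole_body_action": f"execute:{subtask}",
            "trajectory": [start, goal],
        }


def run_dual_system(obs: Observation) -> Tuple[str, List[Dict]]:
    """Slow system plans once; fast system emits one command per subtask."""
    subtasks, reply = SlowSystem().plan(obs)
    fast = FastSystem()
    commands = [fast.act(task, obs) for task in subtasks]
    return reply, commands
```

In a real system the fast path would run at a much higher rate than the slow one and the robot state would be refreshed between subtasks; the sketch only shows which component produces language and which produces motion.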

GALAXEA brought their ‘true end-to-end + true full-body control’ VLA model G0, which also made its first public appearance.

Although the technical report and parameters of the G0 model will not be officially released until August 11th, their on-site bed-making demonstration has proven the extraordinary strength of GALAXEA’s G0 model – with just a natural language instruction ‘Please tidy up the bed’, R1 Lite immediately planned the actions, walked from the head of the bed to the end of the bed, and smoothly and stably tidied up the bed step by step.

It is worth noting that this ‘bed-making’ demonstration is also the world’s first on-site demonstration of a long-horizon task involving flexible objects with full-body motion control.

Proto-Sentient Intelligence brought their VLA model Psi R1. The Psi R1 model has a two-layer architecture of ‘fast brain S1’ and ‘slow brain S2’, where the fast brain S1 focuses on operation, and the slow brain S2 focuses on reasoning and planning. Unlike VLA models such as Pi and Figure, however, the slow brain S2 of the Psi R1 model takes as input not only the visual and language information common in VLM models when perceiving the environment, but also action information (Action Tokenizer).

The input to the Action modality includes historical action sequences, real-time action feedback, physical interaction data, etc. The Action Tokenizer module strengthens multi-modal fusion capabilities: it deeply integrates action data (time series, spatial dimensions) with visual and language information to build a more complete representation of the physical world. The fast and slow brains are implicitly connected through the Action Tokenizer, trained end-to-end, and collaboratively complete dexterous operations in long-horizon tasks. By taking Action as a core input of VLA, the Psi R1 model breaks through the limitation of ‘one-way decision-making’ in traditional embodied intelligent systems and supports a full closed loop of ‘action perception – environmental feedback – dynamic decision-making’.

It is worth mentioning that globally, Proto-Sentient Intelligence is the first company to propose the ‘fast-slow brain’ architecture for VLA models. The robots equipped with Psi R1 also presented amazing performances of playing mahjong, packing, and delivering on site.

Robot Era brought the full-size biped humanoid robot Era L7, which is the only one in China that can achieve ‘high-movement + dexterous operation dual online’. Era L7 not only demonstrated 360° spin jumps, hip-hop Breaking and other skills on site, but also in the logistics simulation scenario, it collaborated with Robot Era’s self-developed end-to-end VLA large model ERA-42 to perform smooth intelligent sorting operations.

In terms of model construction, Robot Era’s VLA model ERA-42 breaks through the imitation learning limitations of mainstream VLA models and proposes an innovative path that integrates world models and reinforcement learning: combining the understanding ability of VLA with the physical detail capture of generative models and the self-improvement of reinforcement learning to form a unified ‘understanding – prediction – action’ model (such as Up VLA), which can control the joints of the five-finger dexterous hand end-to-end and even predict complex physical interactions such as fluids. At the same time, the company is building a ‘model – robot body – data’ closed-loop flywheel: general models adapt to multiple robots, robot bodies reach multiple scenarios to obtain data, and data feeds back into model evolution.

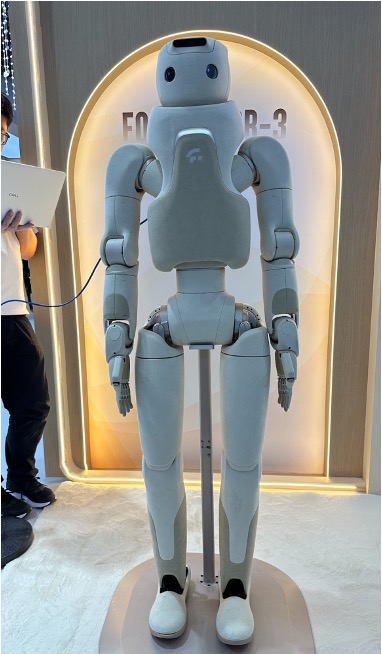

Although Fourier Intelligence did not launch a VLA model, it brought a new companion robot GR-3 equipped with a self-developed full-sensory interaction system.

The system integrates three modules, hearing, vision, and touch, to achieve more natural and anthropomorphic emotional interaction through collaborative feedback. It also introduces an attention management mechanism, which dynamically allocates perception priorities through unified scheduling of multi-modal interaction functions, realizes collaborative feedback of hands, eyes, and brain, and improves the coherence and relevance of interaction responses.

The interaction feedback adopts a ‘dual-path response mechanism’: when the robot receives a single instruction (such as calling, touching), it can immediately trigger ‘fast thinking’ feedback – turning the head to make eye contact quickly when called, and shaking the head slightly in response when touched; when the same instruction is triggered multiple times (such as continuous questioning, multiple touches), the ‘slow thinking’ mode is activated – the large model reasoning engine understands complex semantics, interaction history, and trigger characteristics to generate more natural and scenario-adapted composite responses.
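The ‘dual-path response mechanism’ described above can be sketched as a small dispatcher. This is only an illustration under stated assumptions: the class name `DualPathResponder`, the repeat threshold of 2, and the 5-second window are hypothetical values chosen for the example, and the slow path is a placeholder string rather than a real large-model call. Only the two reflex examples (turning the head when called, shaking the head when touched) come from the article.

```python
from collections import defaultdict


class DualPathResponder:
    """Toy dispatcher: a single trigger gets a fast reflex, repeats go to the slow path."""

    # Reflexes taken from the examples given in the article.
    FAST_REFLEXES = {
        "call": "turn head and make eye contact",
        "touch": "shake head slightly",
    }

    def __init__(self, repeat_threshold: int = 2, window_s: float = 5.0):
        # Both values are assumptions; the article gives no thresholds.
        self.repeat_threshold = repeat_threshold
        self.window_s = window_s
        self._history = defaultdict(list)  # trigger -> recent timestamps

    def handle(self, trigger: str, timestamp: float) -> str:
        # Keep only events of the same kind that fall inside the time window.
        recent = [t for t in self._history[trigger] if timestamp - t <= self.window_s]
        recent.append(timestamp)
        self._history[trigger] = recent
        if len(recent) >= self.repeat_threshold:
            return self._slow_path(trigger, len(recent))
        return "fast: " + self.FAST_REFLEXES.get(trigger, "acknowledge")

    def _slow_path(self, trigger: str, count: int) -> str:
        # Placeholder for the large-model reasoning step described in the article.
        return f"slow: composite response to {count} '{trigger}' events"
```

Calling `handle("call", 0.0)` returns the fast reflex, while a second `handle("call", 1.0)` inside the window is routed to the slow path; a call that arrives after the window has expired is treated as a fresh single trigger again.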

In addition, the interaction system also embeds micro-expression feedback: the newly added eye interaction and expression system can enhance emotional communication and companionship through eye rotation, blinking, and customized pupil effects. When different parts of GR-3 are touched by hand, it responds with matching body movements and expressions, which is very cute.

In addition, GR-3’s standard walking gait can complete bending, squatting and other actions while moving, providing the possibility for robots to move flexibly and perform complex operations in complex three-dimensional spaces.

Trend 4: Bionic Robots – Technology is Imitating Nature

Another impressive trend is that bionic robots, which once only existed in science fiction movies and deep in laboratories, are entering reality at an unprecedented speed.

They are no longer clumsy mechanical imitations, but replicas of living organisms integrating cutting-edge materials, artificial intelligence, and precision control. From bees flying in the air, to robot dogs running on the ground, to humanoid robots with subtle expressions, this conference undoubtedly announces that bionic robots are receiving unprecedented attention, and a new chapter of technology imitating nature has begun.

Bionic Bees, Bionic Birds, and Bionic Dogs

German company Festo brought its bionic bee, BionicBee, to this year’s World Robot Conference. These little creatures weighing only 34 grams can not only imitate the way bees flap their wings, but even more impressively, they can achieve large-scale, fully autonomous swarming flight (not displayed on site).

According to Edward@apfiti.com, there are two core technologies behind the bionic bees.

The first is extreme lightweight design. It is reported that BionicBee adopts ‘generative design’ for the first time: software algorithms search for the design that uses the least material while still meeting structural stability requirements. This gives the robot bees longer flight time and higher maneuverability on limited energy.

The second is precise group collaboration. Although group collaboration was not demonstrated on site, it is understood that this relies on an indoor ultra-wideband (UWB) positioning system. Each ‘bee’ can independently calculate its spatial position, and the central computer uniformly plans the path, even considering the air flow interference between each other during flight.

Festo’s exploration reveals an important direction of bionics: not just imitating individual organisms, but also learning the group intelligence and collaboration modes in nature.
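To make the positioning step more concrete, here is a minimal sketch of how a position can be computed from distances to fixed anchors, which is one common way ultra-wideband ranging is turned into coordinates. It is an assumption that BionicBee works this way; the article only says each bee calculates its own position with an indoor UWB system. The function name `trilaterate`, the use of exactly four anchors, and the noise-free ranges are all illustrative choices.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def trilaterate(anchors, distances):
    """Estimate (x, y, z) from exactly four anchor positions and measured ranges.

    Subtracting the first sphere equation |p - a0|^2 = d0^2 from the other
    three removes the quadratic term and leaves a 3x3 linear system:
        2 (ai - a0) . p = |ai|^2 - |a0|^2 - di^2 + d0^2
    """
    if len(anchors) != 4 or len(distances) != 4:
        raise ValueError("this sketch expects exactly four anchors and ranges")
    (x0, y0, z0), d0 = anchors[0], distances[0]
    rows, rhs = [], []
    for (x, y, z), d in zip(anchors[1:], distances[1:]):
        rows.append([2 * (x - x0), 2 * (y - y0), 2 * (z - z0)])
        rhs.append(
            (x * x + y * y + z * z) - (x0 * x0 + y0 * y0 + z0 * z0) - d * d + d0 * d0
        )
    det = det3(rows)
    if abs(det) < 1e-9:
        raise ValueError("anchors must not be coplanar")
    # Cramer's rule: replace one column at a time with the right-hand side.
    solution = []
    for col in range(3):
        replaced = [row[:] for row in rows]
        for r in range(3):
            replaced[r][col] = rhs[r]
        solution.append(det3(replaced) / det)
    return tuple(solution)
```

With anchors at `(0, 0, 0)`, `(4, 0, 0)`, `(0, 4, 0)`, and `(0, 0, 3)` and exact distances to a point, the function recovers that point up to floating-point error. A real system would use more anchors and a least-squares or filtering approach to cope with ranging noise.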

Not only bees, birds in the sky are also one of the directions of bionic robot exploration.

Hanvon Technology showcased its bionic bird at its booth. It represents another important path in the field of aerial bionics.

Unlike fixed-wing drones, bionic bird robots reportedly pursue more efficient and concealed flight, as well as complex functions such as perching and grasping, by simulating the flapping, folding, and deformation of bird wings.

This field has great technical challenges, involving complex aerodynamics, materials science, and control algorithms. For example, how to design feather wings that are both lightweight and strong and can actively deform, and how to imitate the tendon locking mechanism of birds to achieve energy-saving perching and grasping are problems that global researchers are tackling. Although commercialization still takes time, its potential in environmental monitoring, scientific research, and even future logistics is immeasurable.

In addition to flying creatures, ground creatures were also in the spotlight. Hengbot’s ‘Xiaotian’ bionic robot dog attracted a lot of attention.

Unlike other quadruped robot dogs, Hengbot’s bionic robot dog no longer has the cold metal texture of traditional industrial robots. Instead, through the bionic link structure, it simulates the muscle and bone movement mode of real dogs, achieving amazing flexibility. It can trot, jump, and even ‘dance’ with smooth and natural movements.

The core lies in its unique actuator unit and multi-modal AI interaction system. Users can not only control it in various ways, such as through an app or a handheld controller, but can even teach it new actions through drag teaching without any programming background.

This marks that bionic robot dogs are transforming from mere ‘technology showpieces’ to customizable and interactive ‘intelligent companions’ or ‘development platforms’, providing new options for those who cannot keep real pets.

‘Human-like’ Competition in the Humanoid Robot Track

If imitating animals is the foundation of bionics, then imitating humans themselves is undoubtedly the ultimate goal in this field. At this conference, a number of Chinese enterprises such as YMBOT, Qingbao Robot, Digital Huaxia, and AnyWit Robotics all focused on bionic humanoid robots, especially the details of ‘being human-like’.

The robots displayed by these enterprises are no longer just competing on whether they can walk stably, but on whether they can ‘be indistinguishable from real humans’. They have highly realistic silicone skin and can simulate complex human facial expressions.

For example, YMBOT’s robot can flexibly turn its eyes and raise its eyebrows; AnyWit Robotics’s Anni robot is committed to achieving more ‘vital’ interaction through a multi-modal emotional interaction engine.

Behind this is a competition about ‘soul’. When robots have expressions and can make eye contact, they are no longer cold tools, but potential partners. This opens up a new space for the application of robots in service scenarios such as guided tours, education, and elderly care.

These robots generally integrate large language models, which can not only understand instructions, but also conduct in-depth conversations based on historical knowledge and literary works, such as imitating the ancient poet Su Shi to interact with the audience. This marks that bionic robots are moving from ‘similar in form’ to ‘similar in spirit’, pursuing the unity of external form and internal intelligence.

The bionic robot market is experiencing explosive growth. Market reports predict that the global bionic robot market will expand at a compound annual growth rate (CAGR) of more than 14%, reaching billions of dollars by 2033. Behind this growth is the formation of a complete industrial chain.

From upstream core components (such as high-performance servo motors, reducers, AI chips, sensors), to midstream robot body manufacturing, and then to downstream diversified applications (such as medical rehabilitation, industrial collaboration, military reconnaissance, home services), a huge ecosystem is being built.
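To put the growth projection cited above in concrete terms, a compound annual growth rate can be turned into an overall growth multiple with one line of arithmetic. The sketch below is illustrative only: the function names are made up for this example, and the 8-year horizon (2025 to 2033) is an assumption, since the article does not state the base year or the base market size.

```python
def compound_growth_factor(cagr: float, years: int) -> float:
    """Multiple by which a market grows after `years` at a constant annual rate."""
    return (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Inverse calculation: the constant annual rate linking two values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Assumed horizon: 8 years at exactly 14% per year.
factor = compound_growth_factor(0.14, 8)
print(f"Growth multiple over 8 years at 14%: {factor:.2f}x")
```

Under these assumptions the market would be roughly 2.85 times its starting size at the end of the period; a rate above 14% or a longer horizon would give a larger multiple.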

Of course, challenges still exist. High costs, the tackling of key core technologies, and the improvement of social ethics and safety regulations are all thresholds that bionic robots must cross from exhibits to popularity. But the trend is very clear: technology is maturing, costs are decreasing, and application scenarios are expanding.

As the lights in the WRC 2025 pavilion gradually dim, the outline of a new robot era becomes increasingly clear.

From LiDAR to VLA large models, from bionic design to industrial applications, what we see is not just isolated technological breakthroughs, but the accelerated maturity of a complete ecosystem. The ‘body’ of hardware is becoming more dexterous, powerful, and accessible; the ‘soul’ of AI endows them with the ability to understand the world and perform tasks.

Robots are no longer distant sci-fi concepts, but partners and tools that are looking for specific ‘positions’, measuring ‘cost performance’, and integrating into production and life. Of course, challenges such as costs, core technical bottlenecks, and social ethics still exist, but this has not stopped the rolling wheel of industrial progress.

As the Ministry of Industry and Information Technology’s goal of reaching an annual output of 1 million humanoid robots by 2027 approaches, and as robots of all kinds are applied ever more deeply in industrial, commercial, and family scenarios, we have reason to believe that an era truly belonging to robots is fast approaching.

And China will undoubtedly play a pivotal role in this global industrial transformation.

Author | Edward Ding